
The use of AI-based applications is playing an increasingly important role in today's world, with new apps emerging at a rapid pace since ChatGPT was released in late 2022. Today, many AI agents are capable of multimodal generation and reasoning. One of the billion-dollar questions that remains is:

How can we integrate those agent models in our applications and harness the power of gen-AI?

First things first

Okay, I know you are looking forward to getting your hands dirty with the code, but we need to understand some initial concepts before going to the technical part. We won't dive deep into advanced machine learning concepts because it's a vast field, and this article could become so long that you might not finish reading it (or lose interest).

What is an LLM?

In general terms, a Large Language Model (LLM) is a type of Artificial Intelligence that can recognize and generate human language and a variety of complex types of data. These models are trained on massive datasets, and they become more useful when they are given extra context about your own environment, an idea StackSpot calls hyper-contextualization.

Hyper-contextualization allows a large language model to know your code, styling, tech stack, frameworks, and more. The proprietary AI Assistant plugged directly into your workspace can better understand how you code and provide more targeted solutions, avoiding waste altogether. — StackSpot Blog

RAG (Retrieval-Augmented Generation)

In a nutshell, it's a technique that improves the accuracy and reliability of LLMs by using knowledge sources (KS) as extra information, giving the model more context when it generates responses. The image below shows a simplified view of how it works:

Here we ask the AI agent for a specific piece of information. In the first case, the model can't help us because it does not have access to our personal data. In the second case, when we provide more information through knowledge sources, the agent can retrieve and process the correct information based on the data we gave it access to.
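To make the idea a bit more concrete, here is a minimal sketch of the retrieve-then-augment step. It is not how StackSpot AI works internally: real systems usually rank documents with embeddings, while this toy version uses plain keyword overlap, and every name in it (`KnowledgeDocument`, `retrieve`, `buildAugmentedPrompt`) is made up for illustration.

```typescript
// Toy retrieval-augmented prompt builder (illustrative names, not a real API).
interface KnowledgeDocument {
  id: string;
  text: string;
}

// Lowercase the text and return its unique alphanumeric words.
function tokenize(text: string): string[] {
  const words: string[] = text.toLowerCase().match(/[a-z0-9]+/g) || [];
  return words.filter((word, index) => words.indexOf(word) === index);
}

// Return up to `limit` documents that share the most words with the question.
function retrieve(
  question: string,
  sources: KnowledgeDocument[],
  limit: number
): KnowledgeDocument[] {
  const queryWords = tokenize(question);
  return sources
    .map((doc) => ({
      doc,
      score: tokenize(doc.text).filter((w) => queryWords.indexOf(w) !== -1).length,
    }))
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((entry) => entry.doc);
}

// Put the retrieved passages in front of the question so the model can ground its answer.
function buildAugmentedPrompt(question: string, sources: KnowledgeDocument[]): string {
  const context = retrieve(question, sources, 2)
    .map((doc) => `- ${doc.text}`)
    .join("\n");
  return `Context:\n${context}\n\nQuestion: ${question}`;
}
```

The string returned by `buildAugmentedPrompt` is what would be sent to the model instead of the bare question, which is the difference between the two cases described above.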

Using StackSpot AI

StackSpot is a holistic platform that gives you a robust, easier way to manage your projects and improve the developer experience. In this article, we'll focus on the AI side of the platform, which boosts developer productivity with an AI assistant inside our IDEs and can also be consumed through APIs, as we'll see soon.

If you want to know more about StackSpot, head to the official site.

StackSpot AI is a hyper-contextualized AI agent that lets you use your own knowledge sources. Powered by RAG, it extracts information based on your context and gives more accurate answers.

Building the application

As we discussed before, there are many ways to integrate an AI model into our workflows today: for example, building a recommendation system for an e-commerce site, chat applications that answer frequently asked questions (FAQ), and so on.

To make things easier to follow, we'll create a simple recommendation system that communicates with our AI agent through API requests and gives answers based on user input. Here are the application specifications:

- We will have an e-commerce site that sells plants.

- Users can view a list of products and add them to a shopping cart.

- In the checkout workflow, users can see a list of cart products and a recommendation list of similar products.

And here is where StackSpot AI will shine! We can use something called Remote Quick Commands.

Quick Commands (QC) are customizable actions that are sent to the AI agent and run inside an IDE. There are also Remote Quick Commands (RQC), which run outside an IDE environment, through API requests. See more in the official documentation.
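As a rough sketch of what "through API requests" could look like from the application side, the snippet below builds a request body from the cart and hands it to an injected `send` function. The body shape (`inputData`), the response shape (`{ data }`), and all type and function names are assumptions for illustration, not the StackSpot API; check the official documentation for the real endpoints and payloads.

```typescript
// Minimal cart item; the real product model in the project likely has more fields.
interface CartProduct {
  id: number;
  name: string;
  metadata: Record<string, unknown>;
}

// Hypothetical request and response shapes (assumptions, not the StackSpot contract).
interface RecommendationRequest {
  inputData: string;
}

interface RecommendationResponse {
  data: CartProduct[];
}

// The HTTP call is injected so the flow can be exercised without a network.
type SendRequest = (body: RecommendationRequest) => Promise<RecommendationResponse>;

// Serialize the cart into the single text field the agent receives as input.
function buildRecommendationRequest(cart: CartProduct[]): RecommendationRequest {
  return { inputData: JSON.stringify(cart) };
}

// Ask the agent for recommendations and drop anything already in the cart.
async function requestRecommendations(
  cart: CartProduct[],
  send: SendRequest
): Promise<CartProduct[]> {
  if (cart.length === 0) {
    return []; // nothing to base a recommendation on, so skip the request
  }
  const response = await send(buildRecommendationRequest(cart));
  const idsInCart = cart.map((product) => product.id);
  return response.data.filter((product) => idsInCart.indexOf(product.id) === -1);
}
```

Filtering out products that are already in the cart is a design choice of this sketch; the prompt could enforce the same rule on the agent side.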

Set up AI agent and create RQC

The first thing we need to do is configure our agent to answer properly for our needs. Go to the StackSpot portal, create your own account, and follow the steps below.

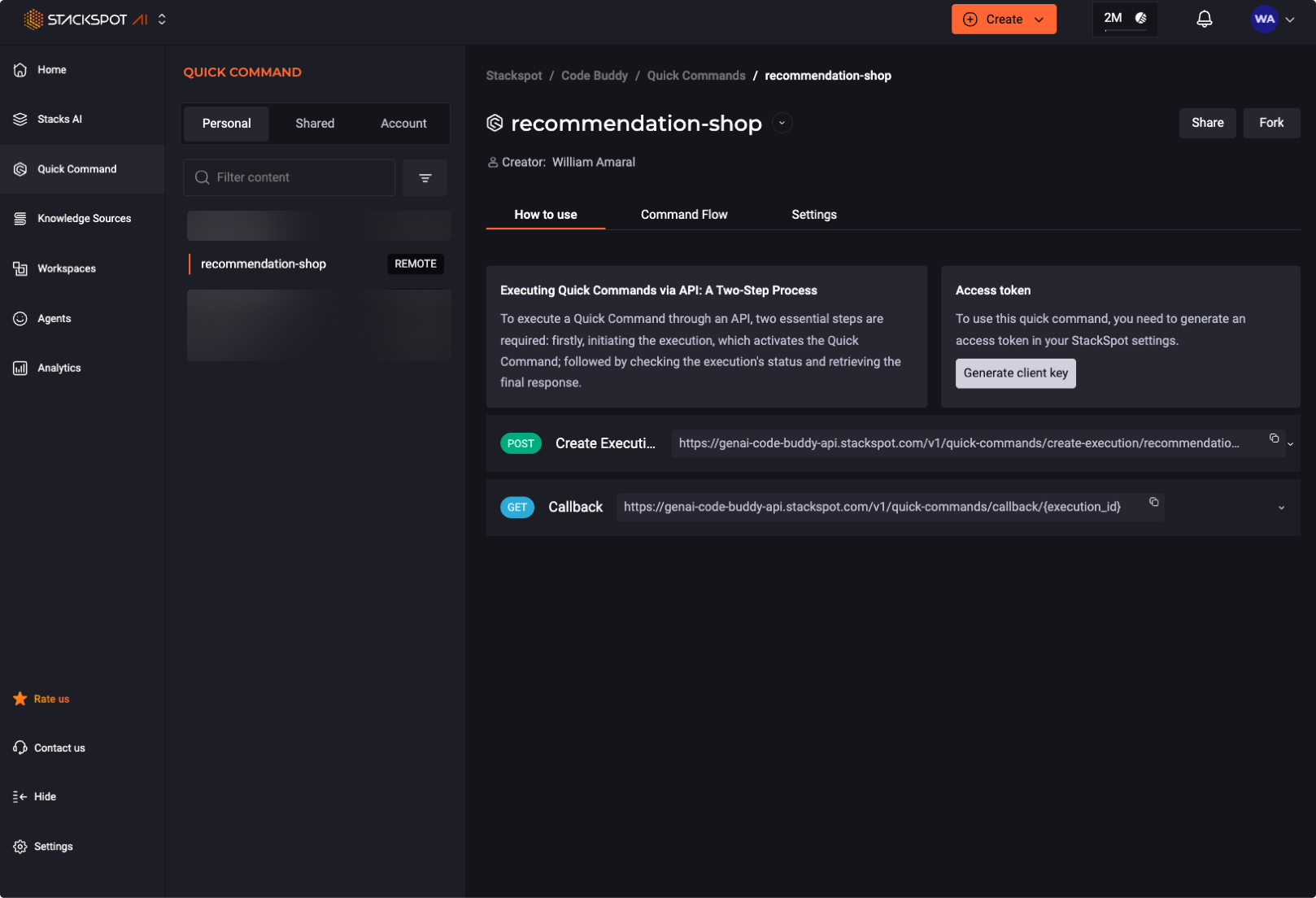

- Create a new Quick Command, select the "Remote" option, and give it a name and a description. A slug will be generated in the "command" section; keep the suggestion or change it, but remember it, because you will use it later to identify your quick command.

- In the next section, you can choose between an empty flow or the "Hello World" template.

- After these quick steps, you will see the following screen with your newly created quick command.

If you need more details about these steps, head to Create and execute remote quick commands.

Now we need to add a new "Knowledge Source" to provide the necessary context when the system receives a request.

- Go to "Create > Knowledge Source"

- When prompted, choose "Custom" as the option and give it a name and a description. Mine is "Plants Database".

- Finally you will be able to add your files.

We will use a JSON file to represent our plants database, but in a real scenario you could use anything that gives the AI agent the proper context, such as OpenAPI specs, Markdown documents, code snippets, etc.
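The actual file from the project isn't shown here, so the following is only a guess at what such a plants database could look like. The prompt used later refers to a "metadata" section on each item; every other field name and value below is hypothetical.

```typescript
// Hypothetical shape of one entry in the "Plants Database" knowledge source.
// Only the "metadata" section is mentioned in the article; the rest is assumed.
interface PlantMetadata {
  light: "low" | "medium" | "high";
  watering: "rare" | "weekly" | "frequent";
  petFriendly: boolean;
  tags: string[];
}

interface PlantRecord {
  id: number;
  name: string;
  price: number;
  metadata: PlantMetadata;
}

// Sample data invented for illustration.
const plants: PlantRecord[] = [
  {
    id: 1,
    name: "Boston Fern",
    price: 19.9,
    metadata: { light: "low", watering: "frequent", petFriendly: true, tags: ["indoor", "hanging"] },
  },
  {
    id: 2,
    name: "Aloe Vera",
    price: 14.5,
    metadata: { light: "high", watering: "rare", petFriendly: false, tags: ["succulent", "indoor"] },
  },
];

// The JSON text that would be uploaded as the knowledge source file.
const plantsJson: string = JSON.stringify(plants, null, 2);
```

The richer and more consistent the metadata is, the more the agent has to work with when it looks for similar products.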

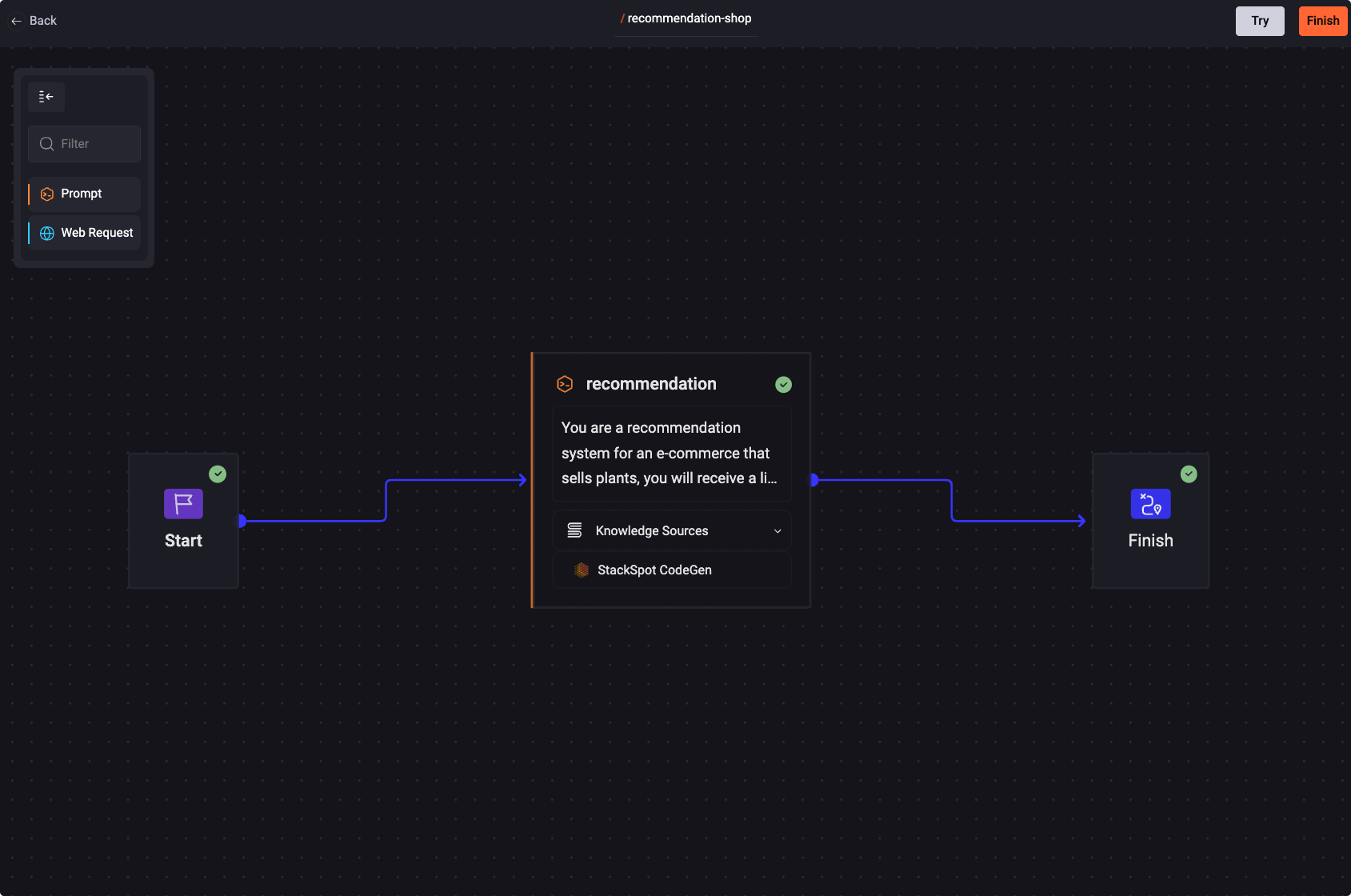

With everything set, we can proceed to the main part and make things happen. Go back to the QC you created and open the "Command Flow" tab. We will set up our AI agent with the system prompt and attach the "Plants Database" KS to it.

The screen will look like this:

You can add a new prompt step or a web request. All of these steps are executed when a request is received, and you can transform the data as you want. So, we need to add our prompt to instruct the agent. Here is a summarized version of it:

    You are a recommendation system for an e-commerce that sells plants,
    you will receive a list of plants that the user added to the cart,
    your task is to use each item's "metadata" section and give two
    recommendations based on our list of available plants to sell.
    ...

Basically, we give the agent all the details it needs to act like a recommendation system: it will receive an array of products and must look at the metadata field of each item to find the best matches for the user, based on the provided shop list.

We also need to add some rules so that the agent answers only with what we need and to reduce the chance of hallucinations. Take a look:

    ...
    Rules:
    - Don't answer anything but the JSON data, without the markdown backticks (e.g. three backticks, or three backticks followed by json).
    - Follow the existing JSON structure for each item.
    - Look up the "metadata" section and use it to leverage the best match given the user's input.
    ...
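Even with these rules, a model can occasionally wrap its answer in a code fence or return plain text, so it can be worth parsing the response defensively on the application side. Here is a small sketch of that idea; `RecommendedProduct` and both function names are hypothetical and not part of the project.

```typescript
// Hypothetical minimal shape we expect for each recommended item.
interface RecommendedProduct {
  id: number;
  name: string;
}

// Remove an optional Markdown code fence wrapped around the model's answer.
function stripCodeFence(raw: string): string {
  const trimmed = raw.trim();
  // `{3} matches three backticks, optionally followed by a language tag such as "json".
  const fenced = trimmed.match(/^`{3}[a-zA-Z]*\s*\n([\s\S]*?)\n?`{3}$/);
  return fenced ? fenced[1].trim() : trimmed;
}

// Runtime check, since JSON.parse gives us no type guarantees.
function isRecommendedProduct(value: unknown): value is RecommendedProduct {
  if (typeof value !== "object" || value === null) {
    return false;
  }
  const candidate = value as Record<string, unknown>;
  return typeof candidate["id"] === "number" && typeof candidate["name"] === "string";
}

// Parse the agent's answer; fall back to an empty list instead of throwing.
function parseRecommendations(raw: string): RecommendedProduct[] {
  let parsed: unknown;
  try {
    parsed = JSON.parse(stripCodeFence(raw));
  } catch {
    return []; // not JSON at all, e.g. an apology written in plain text
  }
  if (!Array.isArray(parsed)) {
    return [];
  }
  return parsed.filter(isRecommendedProduct);
}
```

Returning an empty list keeps the checkout page usable when the agent misbehaves; whether to log, retry, or hide the recommendation section is up to the application.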

Application side

Now that our agent is configured, we need to integrate it into our application. We will not go through every implementation detail, but you can view the full project on GitHub (link at the end of the article).

The recommendation system is a simple flow: we provide the RecommendationService with a product list from the checkout view and pass it as the request body to the API, which processes it and returns the best matches for the user. Below you can see a simplified version of the flow diagram:

All the app's logic is placed in the State class, as you can see in this piece of code:

    @Action(GetRecommendationsAction)
    getRecommendations(
      { getState, patchState }: StateContext<RecommendationStateModel>,
      { payload }: GetRecommendationsAction
    ) {
      return this._recommendationService.getRecommendationsByProducts(payload).pipe(
        map(({ data }) => data),
        map((item) => item.map((e) => new ProductModel().deserialize(e))),
        tap((products) => patchState({ products }))
      );
    }

- We define a function and annotate it with an action called `GetRecommendationsAction`, which receives a `ProductModel[]` as a parameter.

- When this action is dispatched, it asks the `RecommendationService` for similar products.

- When the API answers, each item is deserialized into a valid product model class instance.

- Finally, we update the application state by calling `patchState({ products })`.
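The `new ProductModel().deserialize(e)` call suggests a model class that fills itself from raw API data and returns itself. The real class lives in the GitHub project; the version below is only a guess at the pattern, with an assumed `Deserializable` interface and assumed fields.

```typescript
// Assumed contract: a model copies what it needs from raw data and returns itself.
interface Deserializable<T> {
  deserialize(input: unknown): T;
}

// Hypothetical product model; the fields in the real project may differ.
class ProductModel implements Deserializable<ProductModel> {
  id = 0;
  name = "";
  price = 0;

  // Copy only well-typed fields so a malformed API answer cannot corrupt the model.
  deserialize(input: unknown): this {
    if (typeof input === "object" && input !== null) {
      const raw = input as Record<string, unknown>;
      const id = raw["id"];
      const name = raw["name"];
      const price = raw["price"];
      if (typeof id === "number") this.id = id;
      if (typeof name === "string") this.name = name;
      if (typeof price === "number") this.price = price;
    }
    return this;
  }
}

// Same mapping step as in the state class, without RxJS.
function toProducts(items: unknown[]): ProductModel[] {
  return items.map((item) => new ProductModel().deserialize(item));
}
```

Because `deserialize` returns `this`, it can be chained right after `new ProductModel()`, which is what makes the one-liner inside the `map` operator possible.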

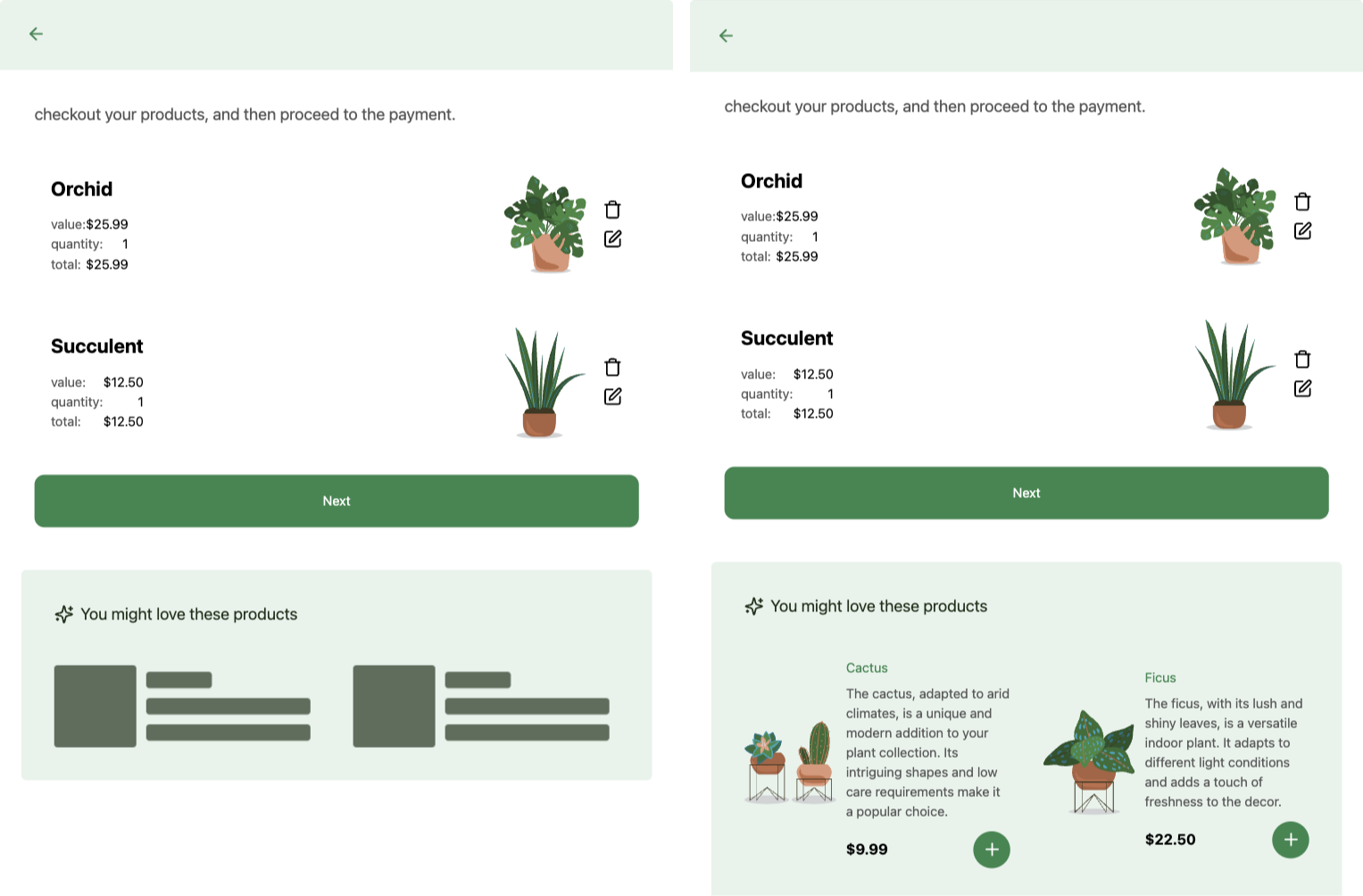

To illustrate how it works in the application, here is a screenshot of the checkout view:

Conclusion

With the power of AI agents, we can elevate our applications to a new level, providing dynamic and rich interactions for users. By using StackSpot AI, you can implement such behaviors not only in applications but also in other areas like a CI/CD pipeline, for example, to automate common tasks — the possibilities are endless.

Last but not least, thank you for being here. See you in the next article :)

Check out the full application here: Ng Shop Workspace — GitHub.